They call me the ‘Guru of Adaptive’, which is flattering, but that’s about it. I will try to shed some light on this, because ‘adaptive’ is neither a new idea, nor solely my idea, nor something that only recently came to the table. I have just been very vocal (and provocative) over the last ten years in denouncing the sweeping claims of the BPM-faithful that they can model a business and run it on rigid flowcharts. It may surprise you how long quite a number of academics have thought that BPM has limited use. Enter adaptive processes.

The idea that predefined, flowcharted processes need to be continuously adapted by users or by the software has been around since before 1995. Keith Swenson also published some papers on collaborative processes around that time. I started to develop the Papyrus Platform in 1997 on the premise of a fully adaptive application. Formal research also took place before that, for example by Manfred Reichert, Peter Dadam and others at the University of Ulm, as well as by Christian W. Günther and Wil M.P. van der Aalst at the Eindhoven University of Technology. While Reichert and Dadam focused on user-interactive flowcharts with their ADEPT system, Günther and van der Aalst searched for the holy grail in process mining from enactment logs.

ADEPT1, from 1998, mainly focused on ad-hoc flexibility and distributed execution of processes, plus process schema evolution, which allows for automatic propagation of process changes to already running instances. With ADEPT2, processes are modeled from scratch by applying high-level change operations. These change operations, with their pre- and post-conditions, try to ensure the structural correctness of the resulting process graph. While that enables syntax-driven modeling and guidance for the process designer, it does limit (by design) the functionality of the result. ADEPT2 also uses a formally independent data flow. Data exchange between activities happens through global process variables stored as different versions of data objects. That leads, however, to different versions of a data object within AND-Split and XOR-Join. While that can cause issues, it is required for correct rollback of process instances when activities fail. ADEPT2 offers a usable solution for process flow improvement, but it is built on a purely academic process theory that leaves many aspects of real-world business needs, such as resources, in the dust.
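
To make the idea of a change operation with pre- and post-conditions more tangible, here is a minimal sketch. It is not ADEPT2 code; the class `ProcessGraph`, the operation `serial_insert` and the activity names are hypothetical and only illustrate how an operation can refuse changes that would break the structure of the graph.

```python
class ProcessGraph:
    """Toy process graph: a set of nodes plus directed control-flow edges."""

    def __init__(self):
        self.nodes = {"start", "end"}
        self.edges = {("start", "end")}

    def serial_insert(self, activity, pred, succ):
        """Insert `activity` between the directly connected `pred` and `succ`."""
        # Pre-conditions: the activity is new and the edge to split exists.
        if activity in self.nodes:
            raise ValueError(f"{activity!r} already exists")
        if (pred, succ) not in self.edges:
            raise ValueError(f"no edge {pred!r} -> {succ!r}")
        self.edges.remove((pred, succ))
        self.nodes.add(activity)
        self.edges |= {(pred, activity), (activity, succ)}
        # Post-condition: every node still lies on a path from start to end.
        assert self.is_connected()

    def is_connected(self):
        from_start = self._reach("start", forward=True)
        to_end = self._reach("end", forward=False)
        return self.nodes <= from_start and self.nodes <= to_end

    def _reach(self, origin, forward):
        # Simple graph traversal, following edges forwards or backwards.
        seen, todo = {origin}, [origin]
        while todo:
            node = todo.pop()
            for a, b in self.edges:
                src, dst = (a, b) if forward else (b, a)
                if src == node and dst not in seen:
                    seen.add(dst)
                    todo.append(dst)
        return seen


graph = ProcessGraph()
graph.serial_insert("check_order", "start", "end")
graph.serial_insert("ship_order", "check_order", "end")
```

The designer can only get from one valid graph to another valid graph, which is exactly the guidance, and the limitation, described above.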

Concepts of ADEPT2 can be found in some BPMN-based systems as well as in Papyrus, particularly the object data model and the model-preserving approach. I see a process, however, as state/event driven by its data objects, content and activities, with the flow being just one way to look at it. I do not see the need for a formally error-free process, because exceptions can happen at any point in time and their resolution is a normal activity, typically executed by a superuser or expert. They could immediately improve the process template if necessary. I also see rules as essential to map controls across multiple processes, while ADEPT2 only uses normal decision gateways. In ADEPT2 each task has a fixed state engine, while I see a state engine as a freely definable object property. That points to another major difference between my research and that of the ADEPT2 team, because I built the data elements and the process execution ON TOP of the same freely definable object model. That means further that all data and process objects have a visible existence OUTSIDE the process and can, for example, be linked into several cases, so that their state changes influence multiple task states. Object integrity can always be ensured through transaction locks. In Papyrus that is also possible across distributed processes and servers.

So what about the ideas of process mining as pursued by Christian W. Günther and Wil M.P. van der Aalst at the Eindhoven University of Technology? Is that a better approach because it considers adaptiveness not from a user design perspective but in terms of mining information from already executed processes?

The first point is: Where do you mine from? If you perform process mining on a normal BPM system there is little to be mined, as processes are executed as-is with only a few decisions taken. Van der Aalst also pursued the integration of ADEPT2 with process mining, because there at least the process can be changed by the user. The idea was that process mining can deliver information about how process models need to be changed, while adaptive process provides the tools to make those changes safely. Process mining moved along for a while, with van der Aalst admitting later that “the problem lies with process mining assumptions that do not hold in less-structured, real-life environments, and thus ultimately make process mining fail there”. So the problem is the same one as with ADEPT2. The real world is simply not a university test lab.

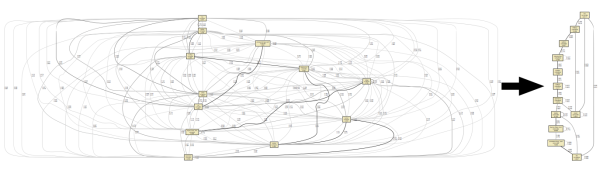

To improve process mining, it would have to work from logs of user interaction in freely collaborative environments, such as email or case management. However, allowing users to work in less restrictive environments will lead to more diverse behavior, leading to “spaghetti” process models that, while in principle an accurate picture of reality, do not allow meaningful abstraction and are useless to process analysts. The graph below has been mined from machine test logs using the Heuristics Miner, showing events as activity nodes in the process model. As everything is connected, it is impossible to interpret a process flow direction or any cause-and-effect dependencies. That led to the concept of the Fuzzy Miner, which combines analysis of the significance and correlation of graph elements to achieve abstraction and aggregation. Fuzzy Miner uses a very flexible algorithm, and tuning it to improve results can be tedious. Van der Aalst says “that process mining, in order to become more meaningful, and to become applicable in a wider array of practical settings, needs to address the problems it has with unstructured processes.” That does not yet sound like real-world benefits will be coming our way soon.
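
A small sketch shows why unstructured logs end up as spaghetti. It uses the dependency measure published for the Heuristics Miner as I understand it, (|a>b| - |b>a|) / (|a>b| + |b>a| + 1), computed from directly-follows counts. The function names, the threshold value and the two sample logs are my own assumptions, and self-loops, which the published miner treats separately, are simply left out.

```python
from collections import Counter


def directly_follows(traces):
    """Count how often activity a is directly followed by activity b."""
    counts = Counter()
    for trace in traces:
        for a, b in zip(trace, trace[1:]):
            counts[(a, b)] += 1
    return counts


def dependency(counts, a, b):
    """Dependency measure between a and b, in the range (-1, 1)."""
    ab, ba = counts[(a, b)], counts[(b, a)]
    return (ab - ba) / (ab + ba + 1)


def dependency_graph(traces, threshold=0.5):
    """Keep only the edges whose dependency measure reaches the threshold."""
    counts = directly_follows(traces)
    return {
        (a, b)
        for (a, b) in list(counts)
        if a != b and dependency(counts, a, b) >= threshold
    }


# A rigid process: every case follows the same path.
structured = [["register", "check", "approve"]] * 5

# Free collaboration: the same three actions in whatever order fits the case.
unstructured = [
    ["a", "b", "c"],
    ["c", "b", "a"],
    ["b", "a", "c"],
    ["c", "a", "b"],
]

print(dependency_graph(structured))
print(dependency_graph(unstructured))
print(dependency_graph(unstructured, threshold=0.0))
```

With the default threshold the structured log yields a clean two-edge flow, while the unstructured log yields nothing at all. Lower the threshold to zero and all six possible edges appear, with no direction left to interpret. That is the tuning dilemma in miniature.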

I chose a completely different approach to adaptiveness and to process mining because I approached it from a human perspective. Who knows how to execute a certain process step? The actor! He should, in principle, be able to create and change anything, and I need to learn from his actions. The business user, however, has no notion of flowcharts. He just works with process resources, such as content. First, our process and data model is built using an object meta-model. Therefore ANYTHING can be adapted at runtime or in the template by any authorized user.

For process mining, the Papyrus User-Trained Agent (UTA) does not look at enactment logs. It performs pattern recognition on the data objects and their relationships across the complete state space at the time the user performs an action. The UTA analyzes which elements of the pattern are relevant for subsequent repetitions of that action. That will include information about previously executed steps and their results. Because the data model of the process execution is fully accessible in real time, the pattern recognition is performed in REAL-TIME each time a user performs a process-relevant action.

To utilize the gathered pattern knowledge, each time a change is triggered in the state space observed by the agent, it tries to identify a matching pattern and, if it finds one, recommends that action to the user. The larger plan beyond that action is not relevant to the UTA. A sequence of recognized patterns will create a fully executable process, while allowing the process knowledge to be shared between multiple and differing executions. If the actor performs the recommended action, the confidence level of that recommendation is raised. If not, the pattern is analyzed for differences from the one behind the recommended action. If it is the same, the confidence level is lowered. One or more of the most confident recommendations can be presented to the actor to choose from.

UTAs can run in parallel and observe all kinds of state spaces for specific roles, queues or arbitrary groups of objects. The UTA does not only learn process execution knowledge, but also whether a prospect is considered creditworthy or whether his behavior seems fraudulent. The UTA does not abstract, deduce or induce rules. It builds on the premise that most of the relevant data that cause a user to perform the current action are in the state space and thus performs a transductive pattern recognition transfer from actor to agent. That, in short, is the content of my 2007 UTA patent.
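
The recommend-and-adjust loop can be sketched in a few lines. This is emphatically not the Papyrus UTA or the patented method; it is a toy under strong assumptions: the state space is a flat dictionary, a pattern matches only if all of its stored attributes are equal, and the class name, the starting confidence of 0.5 and the step of 0.1 are all invented for illustration.

```python
class UserTrainedAgentSketch:
    """Toy agent: remembers state patterns and the action the user took."""

    def __init__(self, step=0.1):
        # Each pattern: {"features": dict, "action": str, "confidence": float}
        self.patterns = []
        self.step = step

    @staticmethod
    def _matches(pattern, state):
        # A pattern matches when all of its stored attributes agree with the state.
        return all(state.get(k) == v for k, v in pattern["features"].items())

    def recommend(self, state, top_n=1):
        """Return the most confident actions whose pattern matches the state."""
        matches = [p for p in self.patterns if self._matches(p, state)]
        matches.sort(key=lambda p: p["confidence"], reverse=True)
        return [p["action"] for p in matches[:top_n]]

    def observe(self, state, action):
        """Learn from the action the user actually performed in this state."""
        matched = [p for p in self.patterns if self._matches(p, state)]
        for p in matched:
            if p["action"] == action:
                # The user did what the pattern suggests: raise confidence.
                p["confidence"] = min(1.0, p["confidence"] + self.step)
            else:
                # Same pattern, different action: lower confidence.
                p["confidence"] = max(0.0, p["confidence"] - self.step)
        if not any(p["action"] == action for p in matched):
            # Unknown combination: store it as a new pattern.
            self.patterns.append(
                {"features": dict(state), "action": action, "confidence": 0.5}
            )


agent = UserTrainedAgentSketch()
claim = {"doc_type": "claim", "amount_band": "high"}
for _ in range(3):
    agent.observe(claim, "escalate")
agent.observe(claim, "approve")
print(agent.recommend(claim, top_n=2))
```

Note that the agent never builds a flow. It only knows, for the state it sees right now, what users have typically done next.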

We are now entering the BUZZWORD BINGO stage of ADAPTIVE process with vendors left and right claiming to be adaptive in nature, often enough while name-dropping all other attributes as well (agile, flexible, dynamic, social). Adaptive process has to involve improvement feedback from the enactment into the process template, either by the actors or by some software mechanism. I propose that in the real world, the feedback and improvement must not just be about the process steps but about any entity or resource in the process/case. The theoretical adaptive approach only focuses on the flowchart improvement and that is its downfall, just like with orthodox BPM vendors claiming to be adaptive. A truly adaptive solution must enable process creation from scratch and allow it to be turned into a fully automated process with backend integration without needing upfront flow analysis. It must be data modeled from a business architecture, goal-driven, support state-event mechanisms, embed business rules, provide inbound and outbound content and empower the business user to create the user interface that is perfect for the job.

Like with sex, there is a substantial difference between speaking about it and actually doing it. At least for adaptive process there is nothing immoral about testing if someone is just boasting or really knows what he is doing. So clearly, you must try before you buy!

[…] This post was mentioned on Twitter by Lee Dallas and Lee Dallas, Max J. Pucher. Max J. Pucher said: Adaptive Process: Theory and Reality – http://wp.me/pd9ls-eB #ACM #BPM #CRM #ECM […]

Congratulations on this post! And I like your UTAs. It seems to me a valid approach to handling unpredictable processes. I have used some of your interpretations in my post today (sorry that it is in German).

v.d. Aalst’s process mining works perfectly when all transactions share the same case ID.

However, in most system landscapes (even in an integrated SAP environment), v.d. Aalst’s process mining fails, because the various data stores use different case IDs, and even different kinds of case IDs. In order to make it work, one first has to relate the case IDs (manually?) across the systems.
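
A minimal sketch of that correlation step, assuming two event sources with their own case IDs and a link table that somebody has to supply. All IDs, activity names and the function `unified_traces` are made up for illustration.

```python
from collections import defaultdict

# Events as exported from two systems, each with its own case identifier.
crm_events = [
    {"case": "CRM-17", "activity": "create quote", "ts": 1},
    {"case": "CRM-17", "activity": "accept quote", "ts": 3},
]
erp_events = [
    {"case": "SO-9001", "activity": "create sales order", "ts": 4},
    {"case": "SO-9001", "activity": "ship goods", "ts": 6},
]

# The hard part: knowing that the sales order belongs to the CRM case.
case_links = {"SO-9001": "CRM-17"}


def unified_traces(event_sources, links):
    """Merge events into one trace per business case, ordered by timestamp."""
    traces = defaultdict(list)
    for events in event_sources:
        for event in events:
            business_case = links.get(event["case"], event["case"])
            traces[business_case].append(event)
    return {
        case: [e["activity"] for e in sorted(evts, key=lambda e: e["ts"])]
        for case, evts in traces.items()
    }


print(unified_traces([crm_events, erp_events], case_links))
```

Without the link table the same call produces two unrelated traces, and the end-to-end process is invisible to any mining algorithm.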

Thanks, Mic. Could you elaborate? What does “works perfectly” mean? One of the biggest issues is that a case is not only built from transitions but also has to take the variable data of each case into account. So it is not just case IDs that have to be matched but also all the data variable names. It is not just case routing and assignment that has to be identified. Does the Aalst mining also abstract business rules related to the business data?

[…] Pucher touched on this recently in “Adaptive Process: Theory and Reality” and that is the best source because he really is the “Guru of Adaptive”. To […]

I agree that “The real world is simply not a university test lab”, but good things do come from them, especially ProM from Eindhoven’s labs.

The real world is full of legacy systems and event logs. It is full of undocumented systems with no procedures or process diagrams.

I don’t agree that it “Does not yet sound like real world benefits will be coming our way soon”.

Many of us use ProM to discover Legacy systems. Often if the event logs are not obvious they can be found in other ways – IBM journals – it just takes imagination.

One of the other comments asks “Does the Aalst mining also abstract business rules related to the business data?”. Most certainly. You can unravel intertwined systems (where there are no clear boundaries) using semantics.

Just because a capability does not solve every problem doesn’t mean it should be dismissed.

George, thanks for the comment. I am in no way discounting various process mining techniques. In principle we do the same thing. I am asking if these techniques will produce real world benefits in the sense that they can be used by business users without ‘imaginative’ knowledge engineering. I wonder how semantics would allow you to build business rules from data. Please elaborate. I am focused on solving the problem for the business user. A partial solution just creates more complexity in the end. We need to find simplicity to finally ensure that businesses are not held back by a lack of IT functionality.

Thanks, Max. Sorry, there are two separate points.

Firstly, if you have mined the appropriate attributes with an event log then you can reveal business rules quite easily. Once you have discovered the workflow using a tool like ProM you can use the Decision Point Miner to reveal the details.

ProM will show you that, for example, the workflow goes down one path when the item type = X and the customer type = Z, where these two types were captured attributes. It will go down another path when the attributes are different.
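
The principle can be sketched as a plain tabulation of captured attributes against the path taken. This is not ProM or its Decision Point Miner, just an illustration of the idea with hypothetical case data and a hypothetical function `decision_rules`.

```python
from collections import defaultdict

# Hypothetical mined cases: captured attributes plus the path that was taken.
cases = [
    {"item_type": "X", "customer_type": "Z", "path": "manual review"},
    {"item_type": "X", "customer_type": "Z", "path": "manual review"},
    {"item_type": "Y", "customer_type": "Z", "path": "auto approve"},
    {"item_type": "Y", "customer_type": "W", "path": "auto approve"},
    {"item_type": "X", "customer_type": "W", "path": "auto approve"},
    {"item_type": "X", "customer_type": "W", "path": "manual review"},
]


def decision_rules(cases, attributes, outcome="path"):
    """Return attribute combinations that always led to the same outcome."""
    seen = defaultdict(set)
    for case in cases:
        key = tuple(case[a] for a in attributes)
        seen[key].add(case[outcome])
    # Combinations with more than one observed outcome are not explained
    # by these attributes alone and are left out.
    return {
        key: next(iter(outcomes))
        for key, outcomes in seen.items()
        if len(outcomes) == 1
    }


rules = decision_rules(cases, ["item_type", "customer_type"])
for (item, customer), path in sorted(rules.items()):
    print(f"item type = {item} and customer type = {customer} -> {path}")
```

The combination X/W shows the limit: where the captured attributes do not explain the decision, no rule comes out.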

Secondly, using ontologies you can solve the problems of heterogeneity and complexity (as you mention) of large systems.

This can be done, for example, with an ERP system that has lots of activities at the lowest level which are described using codes. You can abstract those to meaningful descriptions at the next level up, then group these under more meaningful names at the top level.

For example, all the purchasing activities are grouped as “Purchasing”. So you move from 300+ codes to 20+ high-level “activities” in the workflow.
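
A minimal sketch of that two-level abstraction, with made-up codes and names. A real ontology would carry far more structure than these two lookup tables, which are purely hypothetical.

```python
# Level 1: low-level codes mapped to meaningful descriptions (hypothetical codes).
ACTIVITY_OF_CODE = {
    "P001": "Create purchase requisition",
    "P002": "Approve purchase order",
    "S001": "Create sales order",
}

# Level 2: descriptions grouped under high-level activity names.
GROUP_OF_ACTIVITY = {
    "Create purchase requisition": "Purchasing",
    "Approve purchase order": "Purchasing",
    "Create sales order": "Sales",
}


def abstract_trace(codes):
    """Lift a trace of codes to high-level activities, merging repeats."""
    groups = []
    for code in codes:
        activity = ACTIVITY_OF_CODE.get(code, code)  # unknown codes stay as-is
        group = GROUP_OF_ACTIVITY.get(activity, activity)
        if not groups or groups[-1] != group:
            groups.append(group)
    return groups


print(abstract_trace(["P001", "P002", "S001"]))
```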

I have detailed this in my blog http://businessprocessmining.blogspot.com/

Hope this helps!

George, thanks. That explains it. It also illustrates my point that ProM is focused on simple process flows and decision points. To my mind, however, a process is a dynamic structure of many different entities whose information content is typically not exposed and can’t be mined in real time from the systems that carry them. These entities further change continuously, and it must be in the hands of the business user to make those changes.

My approach is to let the business user define data, content, rules (where definable), participants, goals and user interface, and to let the User-Trained Agent discover the patterns at ACTION points. That produces ‘actionable knowledge’ related to all entities of a process without the need for creating ontologies, analysing semantics, or abstracting rules. As the UTA is an iterative function, the process evolves as participants make changes and the agent makes suggestions to participants as to the typical decisions taken. That relates to ANY action taken and not just routing.

The key distinction is that there is no independent mining; instead, the agent is exposed to all attributes of all entities in the state space at all times. It performs an automatic relevancy weighting of attributes that are always the same or always different in decision patterns. I do not need to make a decision as to which are the ‘appropriate’ attributes that I have to mine.
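
One very simple way to picture such a weighting is to look at all the state snapshots taken when the same action was performed and ask, per attribute, how stable its value was. This is only a sketch under that assumption, not the UTA algorithm; the function `attribute_weights` and the sample snapshots are hypothetical.

```python
from collections import Counter


def attribute_weights(observations):
    """Weight each attribute by the share of its most frequent value.

    An attribute that is always the same scores 1.0; one that differs in
    every observation scores 1/n and carries no information for the action.
    """
    n = len(observations)
    keys = set().union(*observations)
    weights = {}
    for key in keys:
        values = Counter(obs.get(key) for obs in observations)
        weights[key] = values.most_common(1)[0][1] / n
    return weights


# State snapshots captured each time a user performed the same action.
snapshots = [
    {"doc_type": "claim", "amount_band": "high", "case_id": "C-1"},
    {"doc_type": "claim", "amount_band": "high", "case_id": "C-2"},
    {"doc_type": "claim", "amount_band": "low", "case_id": "C-3"},
    {"doc_type": "claim", "amount_band": "high", "case_id": "C-4"},
]

weights = attribute_weights(snapshots)
for name in sorted(weights, key=weights.get, reverse=True):
    print(name, weights[name])
```

Nobody had to declare in advance that the document type matters and the case ID does not. That falls out of the observations.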

Thanks again for the comment.

Process mining is not focused on “simple process flows and decision points”. It also has capabilities regarding human and resource performance as well as audit capabilities. The semantic capabilities are also very powerful. Unless you have used these capabilities you are not really in a position to dismiss them.

Being provocative is fine if it serves a purpose. Challenging other methodologies can be useful too but does it take you anywhere?

Perhaps instead look for the benefits of these ‘other’ methodologies and technologies?

I am looking at ACM to see how it can complement what I already use and understand.

George, thanks again. I can challenge anything that I feel does not serve a purpose. Mining processes that are already reducing business resilience is to me utterly useless.

I am sorry that I have to correct you. Process Mining in the scientific meaning of the term is not auditing, monitoring, or other general data collection about processes. I am pointing out that the described process mining concepts do not collect information about metadata models, content structure, general process patterns, and user behavior and abstract those into more generic process information. This misuse of the term ‘process mining’ for monitoring shows that it is popular in BPM (as in IT in general) to bend the meaning of clever sounding terms for a marketing purpose.

I have used and worked with and moreover scientifically studied these methodologies and technologies for a long time. I am done with treading lightly. There is so much nonsense being presented that someone at least has to stand up and speak out.

You do it your way and I do it mine. I totally agree with you that I am not making my life easier. But I say what I have to say if it is my belief.

‘I rather be true to myself and hated than a popular coward.’ Robert B. Laughlin, Nobel Laureate
